Josip Rozman

Consultant

Artificial Intelligence in the Big and Scary Real World

There is no denying Artificial Intelligence (AI) has been (and still is) a world-changing technology, but we tend to focus only on the positives.

As a disclaimer, I am a firm believer in AI, despite the negatives presented in this blog. While models can be used in ways deemed unethical, such as deepfakes, social scoring, or face recognition, I will focus here on the failure cases of models that had no ill intent to begin with.

My first example is Microsoft’s Tay chatbot. The goal was to create an AI that mimicked the conversation of a 19-year-old, with the bot learning through interaction with other users on Twitter. While the bot started out playful, it soon turned racist and sexist, and Microsoft shut it down 16 hours after release.

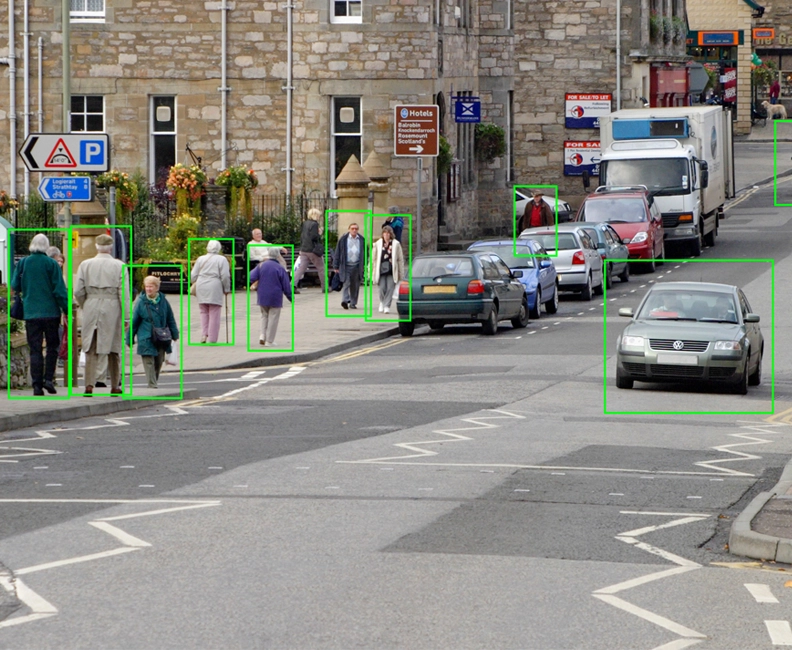

The second example is the fatal Tesla crash a few years back. This was the first known fatality of its kind: the system misclassified a tractor-trailer as a brightly lit sky background, and as a result no brakes were applied. Tesla reports on average one accident every 4.19 million miles driven, and this figure is expected to improve with further optimisation. For comparison, NHTSA’s data shows that in the United States there is an automobile crash every 484,000 miles. While the AI outperforms human drivers on this measure, people still have trouble trusting it, partly because they overestimate human abilities, and partly because its failure cases are not the kind a human driver would typically make.
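As a quick sanity check on that comparison, the ratio of the two quoted figures can be worked out directly. This is only arithmetic on the numbers given in this post; the constant and function names are illustrative, and the calculation says nothing about whether the two figures were collected under comparable driving conditions.

```python
# Figures quoted in the text above, expressed as miles driven per accident.
TESLA_MILES_PER_ACCIDENT = 4_190_000
US_AVERAGE_MILES_PER_ACCIDENT = 484_000


def improvement_factor(system_miles: float, baseline_miles: float) -> float:
    """Return how many times further the system travels per accident than the baseline."""
    return system_miles / baseline_miles


factor = improvement_factor(TESLA_MILES_PER_ACCIDENT, US_AVERAGE_MILES_PER_ACCIDENT)
print(f"Roughly {factor:.1f} times more miles per accident")
```

On these figures the factor comes out a little under nine.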

What these two failures have in common is that the training data did not match the data observed during deployment, and the model failed to respond correctly to the unfamiliar input.
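One simple way to picture this mismatch is to compare a summary statistic of the training data with the same statistic in deployment. The sketch below is a minimal illustration under strong assumptions: a single numeric feature, made-up brightness readings, and a hypothetical threshold of three standard deviations. It is not how either system described above works, and real monitoring would look at many features and richer statistics.

```python
from statistics import mean, stdev


def drift_score(train_values, live_values):
    """Distance of the live mean from the training mean, in training standard deviations."""
    spread = stdev(train_values)
    if spread == 0:
        raise ValueError("training values have no spread; the score is undefined")
    return abs(mean(live_values) - mean(train_values)) / spread


def looks_out_of_distribution(train_values, live_values, threshold=3.0):
    """Flag live data whose mean sits far from what was seen in training.

    The threshold is a hypothetical choice for illustration only.
    """
    return drift_score(train_values, live_values) > threshold


# Hypothetical image-brightness readings (0 = dark, 1 = fully bright).
train_brightness = [0.40, 0.45, 0.50, 0.55, 0.60]
similar_live = [0.44, 0.52, 0.49]   # resembles the training data
shifted_live = [0.95, 0.97, 0.99]   # much brighter than anything seen in training

print(looks_out_of_distribution(train_brightness, similar_live))
print(looks_out_of_distribution(train_brightness, shifted_live))
```

A check like this cannot make the model behave correctly on unfamiliar input, but it can signal that the input is unfamiliar, so the system can fall back to a safer behaviour or ask for human attention.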

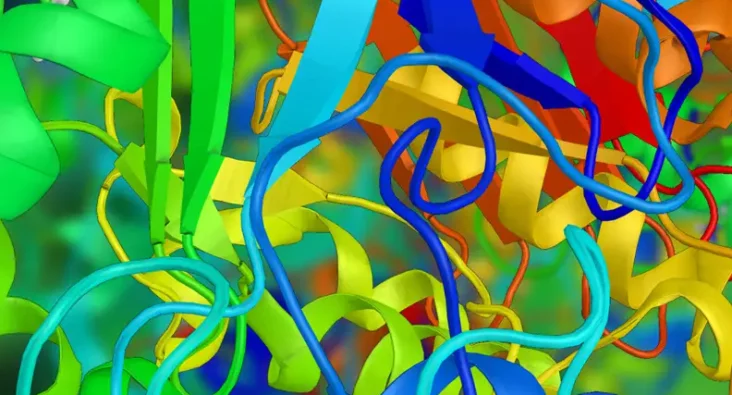

This highlights the importance of understanding your deployment environment and what might go wrong in it (prepare for the unexpected), but it also exposes one of the large flaws in the current trajectory of machine learning research. Machine learning models grow larger year by year, requiring more and more computational power, in the form of GPUs and TPUs, to train. One consequence of this growth is high power consumption during training. Another is that, because of their black-box nature, these models cannot be calibrated to a new environment without additional training data. We explored the use of a Type-2 Fuzzy Logic alternative to black-box models, and our work was presented at the FUZZ-IEEE 2021 conference.

One more aspect worth discussing is the role of governments in regulating AI and reducing risk. Views on how far that role should extend vary, and are largely influenced by culture. Some applications of AI, such as “social scoring systems”, are considered completely unacceptable in the West, but are considered normal in the East. Should self-driving cars be so heavily scrutinized for every accident they are involved in, or should we focus on the positive aspects of the technology (close to a ninefold reduction in the number of accidents per mile, based on the figures above)? Government regulations tend to focus on the negative aspects, but on the other hand, isn’t it our moral responsibility to implement the technology if it can save lives? While regulation gives more credibility to the field, resulting in more investment, it also slows down the adoption of the technology.

To conclude, I believe the world will be a better place for the use of AI, especially should AGI (artificial general intelligence) be reached, but it is not all roses. It is important to have a good grasp of the problem space and of the environment where the model will be deployed, as the cost of failure can be high (in terms of life, money and credibility) and could lead to a new AI winter.

What are your thoughts? Do the benefits of the use of black-box AI outshine the flaws, or should we look at explainable alternatives even at the cost of performance?

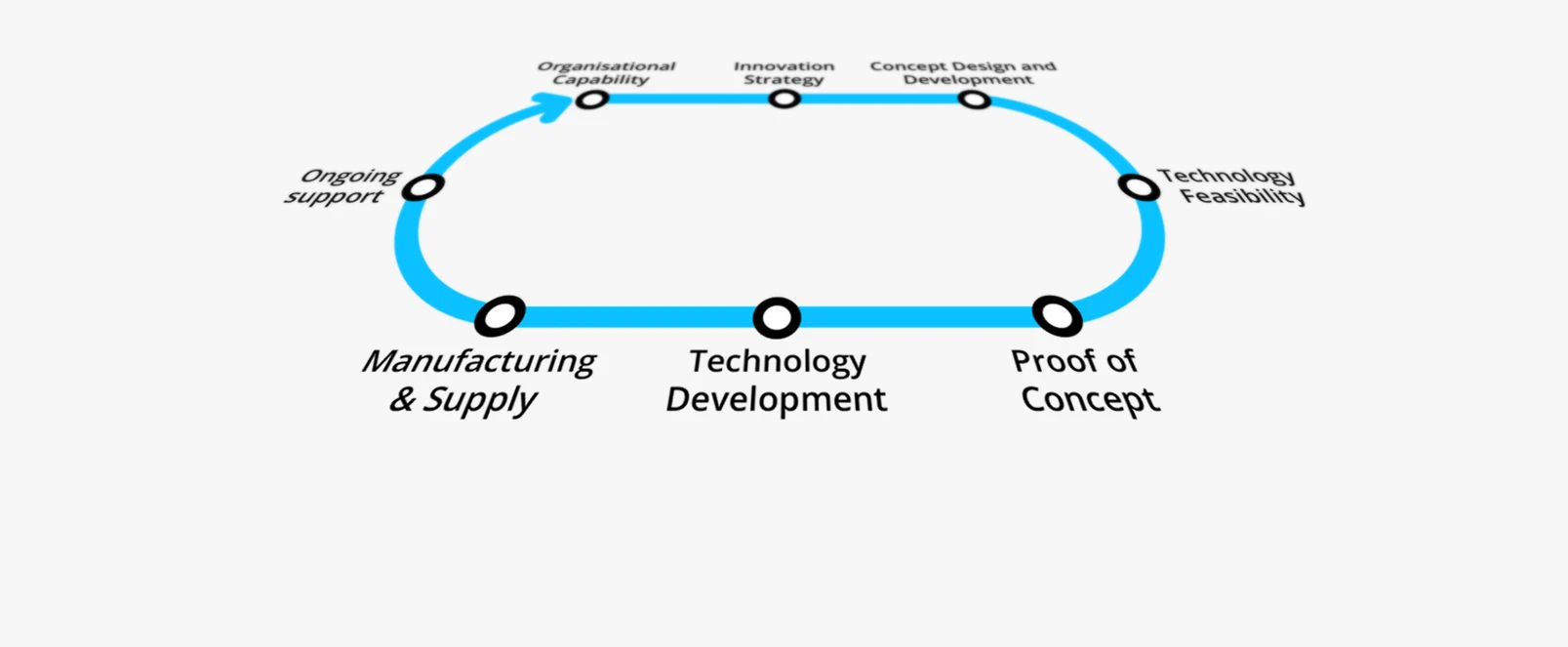

Technology Platforms

Plextek's 'white-label' technology platforms allow you to accelerate product development, streamline efficiencies, and access our extensive R&D expertise to suit your project needs.

-

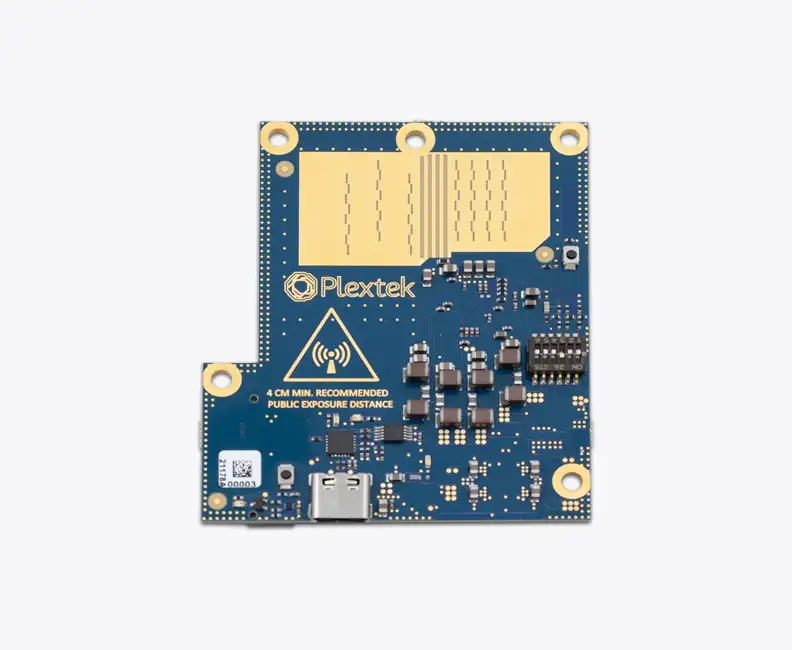

01 Configurable mmWave Radar ModuleConfigurable mmWave Radar Module

Plextek’s PLX-T60 platform enables rapid development and deployment of custom mmWave radar solutions at scale and pace

-

02 Configurable IoT FrameworkConfigurable IoT Framework

Plextek’s IoT framework enables rapid development and deployment of custom IoT solutions, particularly those requiring extended operation on battery power

-

03 Ubiquitous RadarUbiquitous Radar

Plextek's Ubiquitous Radar will detect returns from many directions simultaneously and accurately, differentiating between drones and birds, and even determining the size and type of drone