Signal Processing & Data Analytics

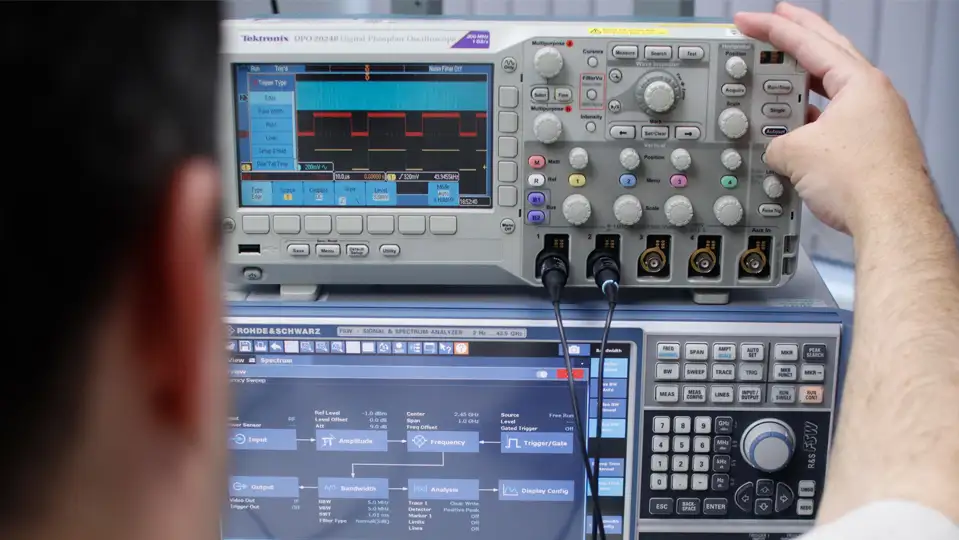

Navigating the complex world of radio waves for effective, reliable communication

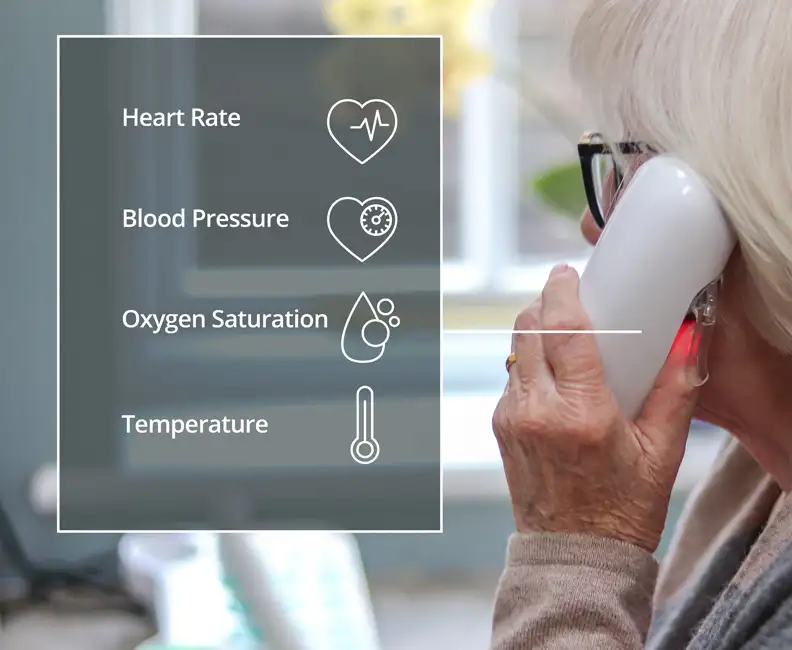

Signal processing reveals all kinds of valuable information hidden in data from a variety of sources – from audio signals, video and images to sensor systems.

For example, it might involve collecting data from the sensors in a fitness tracker to report on sleep patterns, or converting the output of the ultrasound, radar and camera systems on an autonomous vehicle into the data needed to control navigation.

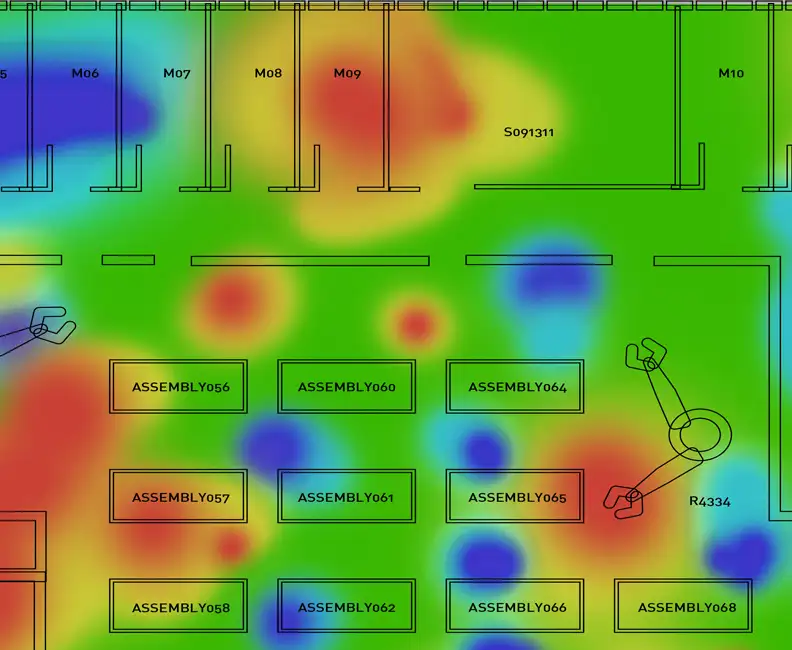

In a factory setting, signal processing is typically used to collect and analyse the data from sensors attached to production line machines to detect when the equipment needs remedial maintenance work.
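As a minimal sketch of this idea, the Python below compares the RMS vibration level of successive fixed-length windows with a baseline recorded while the machine was known to be healthy, and flags windows that rise more than three standard deviations above it. The function names (`window_rms`, `flag_anomalous_windows`), the window length, the 3-sigma rule and the synthetic square-wave data are all illustrative assumptions, not a description of a particular production system.

```python
import math
import statistics


def window_rms(samples, window):
    """Return the RMS value of each consecutive, non-overlapping window."""
    rms_values = []
    for start in range(0, len(samples) - window + 1, window):
        chunk = samples[start:start + window]
        rms_values.append(math.sqrt(sum(x * x for x in chunk) / window))
    return rms_values


def flag_anomalous_windows(samples, window, baseline_windows, k=3.0):
    """Return (indices of windows whose RMS exceeds mean + k*std, threshold).

    Assumes (hypothetically) that the first `baseline_windows` windows were
    recorded while the machine was healthy; at least two are needed so that
    a standard deviation can be computed.
    """
    rms_values = window_rms(samples, window)
    baseline = rms_values[:baseline_windows]
    threshold = statistics.mean(baseline) + k * statistics.stdev(baseline)
    flagged = [i for i, rms in enumerate(rms_values) if rms > threshold]
    return flagged, threshold


# Illustrative data only: a square-wave "vibration" whose amplitude alternates
# between 1.0 and 1.1 while healthy, then jumps to 2.0 after a simulated fault.
WINDOW = 50
healthy = []
for w in range(20):
    amp = 1.0 if w % 2 == 0 else 1.1
    healthy += [amp if n % 2 == 0 else -amp for n in range(WINDOW)]
faulty = [2.0 if n % 2 == 0 else -2.0 for n in range(5 * WINDOW)]

flags, threshold = flag_anomalous_windows(healthy + faulty, WINDOW, baseline_windows=20)
print(flags)  # [20, 21, 22, 23, 24]
```

RMS is only one possible health indicator; in practice, spectral features and thresholds tuned against real failure data are common refinements.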

Signal processing techniques also remove unwanted disturbances, outliers and artefacts from ‘noisy’ real-world data, producing cleaner, more reliable datasets – essential for accurate modelling, prediction and other advanced data analysis tasks.
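One common way to do this, sketched below under simple assumptions, is to replace isolated outliers using a Hampel filter (a sliding-window median with a median-absolute-deviation threshold) and then smooth the remaining noise with a centred moving average. The window sizes, the 3-sigma threshold and the sample readings are illustrative; this is a generic technique, not necessarily the method used in any specific project.

```python
import statistics


def hampel_filter(samples, half_window=3, n_sigmas=3.0):
    """Replace outliers with the local median (Hampel identifier)."""
    cleaned = list(samples)
    for i in range(len(samples)):
        # Window of up to 2*half_window + 1 samples, truncated at the edges.
        lo = max(0, i - half_window)
        hi = min(len(samples), i + half_window + 1)
        neighbourhood = samples[lo:hi]
        med = statistics.median(neighbourhood)
        # 1.4826 * MAD estimates the standard deviation for Gaussian noise.
        mad = statistics.median([abs(x - med) for x in neighbourhood])
        if abs(samples[i] - med) > n_sigmas * 1.4826 * mad:
            cleaned[i] = med
    return cleaned


def moving_average(samples, width=3):
    """Centred moving average; the window is truncated at the edges."""
    half = width // 2
    smoothed = []
    for i in range(len(samples)):
        lo = max(0, i - half)
        hi = min(len(samples), i + half + 1)
        smoothed.append(sum(samples[lo:hi]) / (hi - lo))
    return smoothed


# Illustrative sensor readings around 1.0 with two spurious spikes.
raw = [1.0, 1.2, 0.9, 1.1, 25.0, 1.0, 0.8, 1.1, 1.0, -30.0, 1.2, 0.9]
cleaned = hampel_filter(raw)
smoothed = moving_average(cleaned)
print(cleaned[4], cleaned[9])  # 1.1 0.95
```

The median-based first stage matters: a mean-based filter would smear a large spike into its neighbours, whereas the median largely ignores it. When every value in a window is identical the MAD is zero, so any deviation at all is treated as an outlier.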

Real-world challenges

Key skills

-

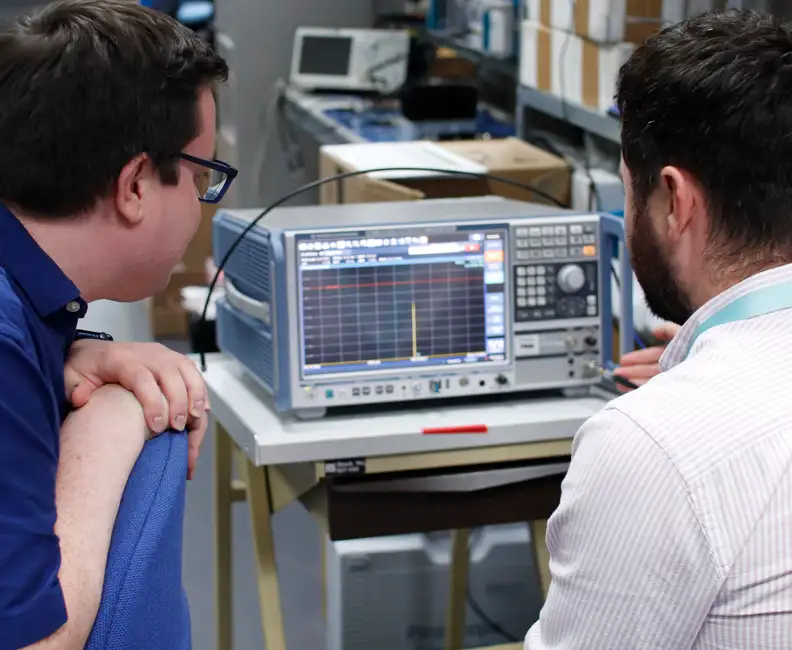

Expertise in real-time system design

Specialising in the design of systems, such as radar, that require non-stop operation over extensive periods.

- Reliability engineering

  Ensuring high system reliability for critical applications, essential in fields such as autonomous vehicle navigation and industrial monitoring.

- Expenditure optimisation

  Delivering complex signal processing solutions within strict budgetary limits, optimising expenditure without sacrificing performance.

- Intelligence at the edge

  Building systems that interpret their surroundings locally, enabling autonomous operation where communication with central processing is limited or unavailable.

- Python code implementation

  Employing Python for data processing pipelines, providing practical code examples for the software development community.

- Signal interpretation

  Translating complex raw data from varied sources into actionable insights, crucial for making informed decisions.

- Noise filtering techniques

  Applying signal processing methods that minimise unwanted disturbances and artefacts, resulting in cleaner and more dependable datasets.

- Predictive maintenance

  Using signal analysis to forecast when equipment requires maintenance, significantly reducing downtime and operational expenses by detecting issues before they cause failure.

- Low size, weight and power (SWAP) operation

  Obtaining optimal processing performance per watt when power budgets are highly constrained.
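Several of these skills (continuous real-time operation, intelligence at the edge, low SWAP and Python data processing pipelines) meet in stream processing, where each sample is handled as it arrives using a fixed amount of memory. The sketch below chains Python generators into such a pipeline. The stage names, the smoothing factor `alpha` and the alert `limit` are hypothetical choices for illustration; a real deployment would be shaped by the sensor, the hardware and the latency budget.

```python
import math
from typing import Iterable, Iterator, Optional, Tuple


def drop_invalid(samples: Iterable[Optional[float]]) -> Iterator[float]:
    """Discard missing or non-finite readings (e.g. sensor dropouts)."""
    for x in samples:
        if x is not None and math.isfinite(x):
            yield x


def ema_smooth(samples: Iterable[float], alpha: float = 0.2) -> Iterator[float]:
    """Exponential moving average: one value of state, one update per sample."""
    state: Optional[float] = None
    for x in samples:
        state = x if state is None else alpha * x + (1 - alpha) * state
        yield state


def threshold_alerts(samples: Iterable[float], limit: float) -> Iterator[Tuple[int, float]]:
    """Yield (index, value) for every sample above `limit`.

    The index counts samples reaching this stage, i.e. after invalid
    readings have been dropped.
    """
    for i, x in enumerate(samples):
        if x > limit:
            yield i, x


def pipeline(raw: Iterable[Optional[float]], alpha: float, limit: float) -> Iterator[Tuple[int, float]]:
    """Chain the stages lazily; nothing runs until the result is iterated."""
    return threshold_alerts(ema_smooth(drop_invalid(raw), alpha), limit)


# Illustrative stream: a lone glitch, a dropout (None), a NaN, then a sustained rise.
readings = [0.0, 3.0, 0.0, None, float("nan"), 0.0, 5.0, 5.0, 5.0]
alerts = list(pipeline(readings, alpha=0.5, limit=2.0))
print([i for i, _ in alerts])  # [4, 5, 6]
```

Because each stage keeps at most one value of state, memory use does not grow with the length of the stream, which suits constrained edge hardware. The exponential moving average also stops the lone 3.0 glitch from raising an alert, at the cost of some lag: a smaller `alpha` suppresses more glitches but responds more slowly to genuine changes.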

What sets us apart when it comes to signal processing & data analytics?

Our signal processing & data analytics expertise enables us to turn complex data into clear, actionable insights. Our areas of expertise include:

- Clean datasets

- Continuous operation

- Data modelling

- Data processing pipelines

- Image processing

- Modelling and predictions

- Noise reduction

- Non-expert operation

- Predictive maintenance

- Python programming

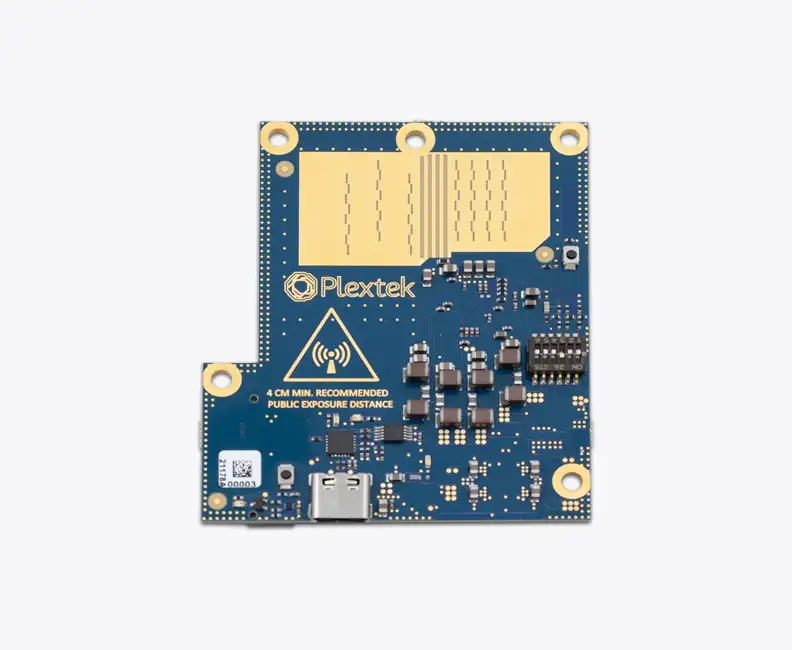

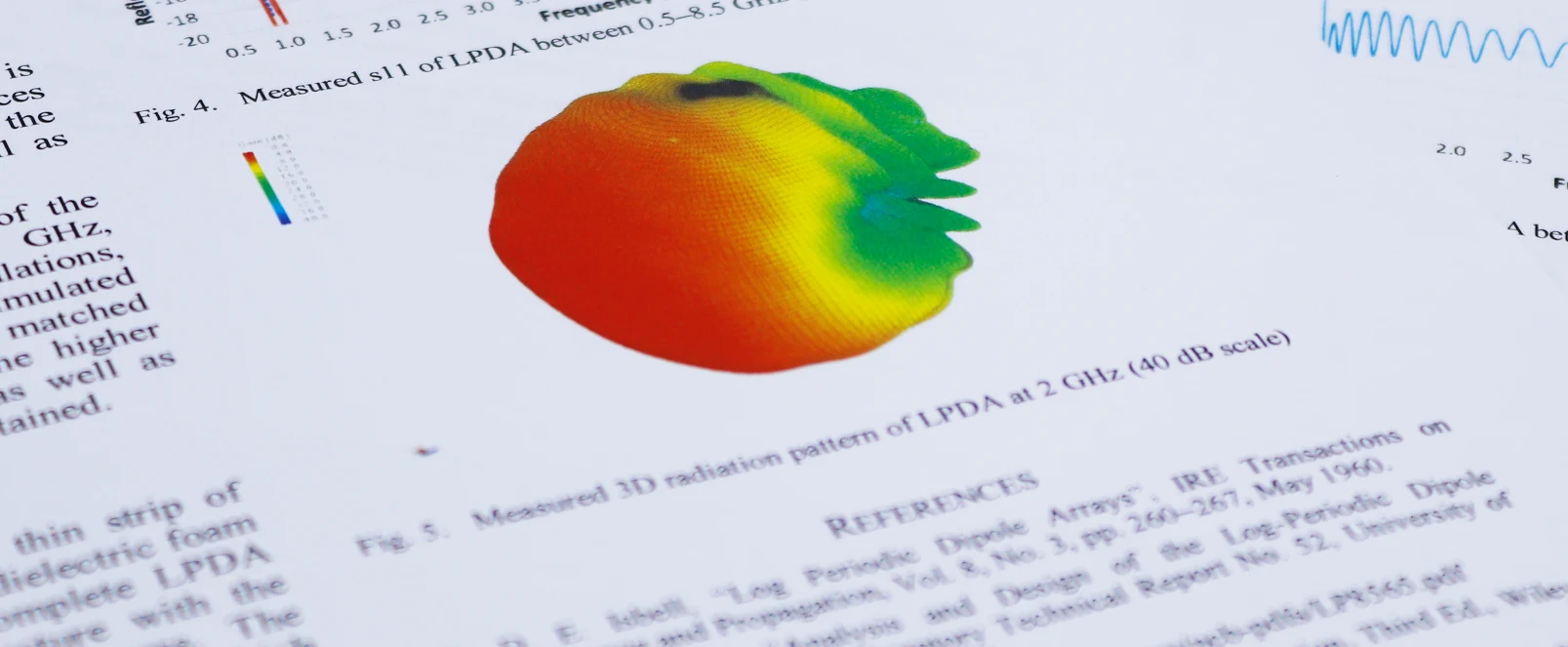

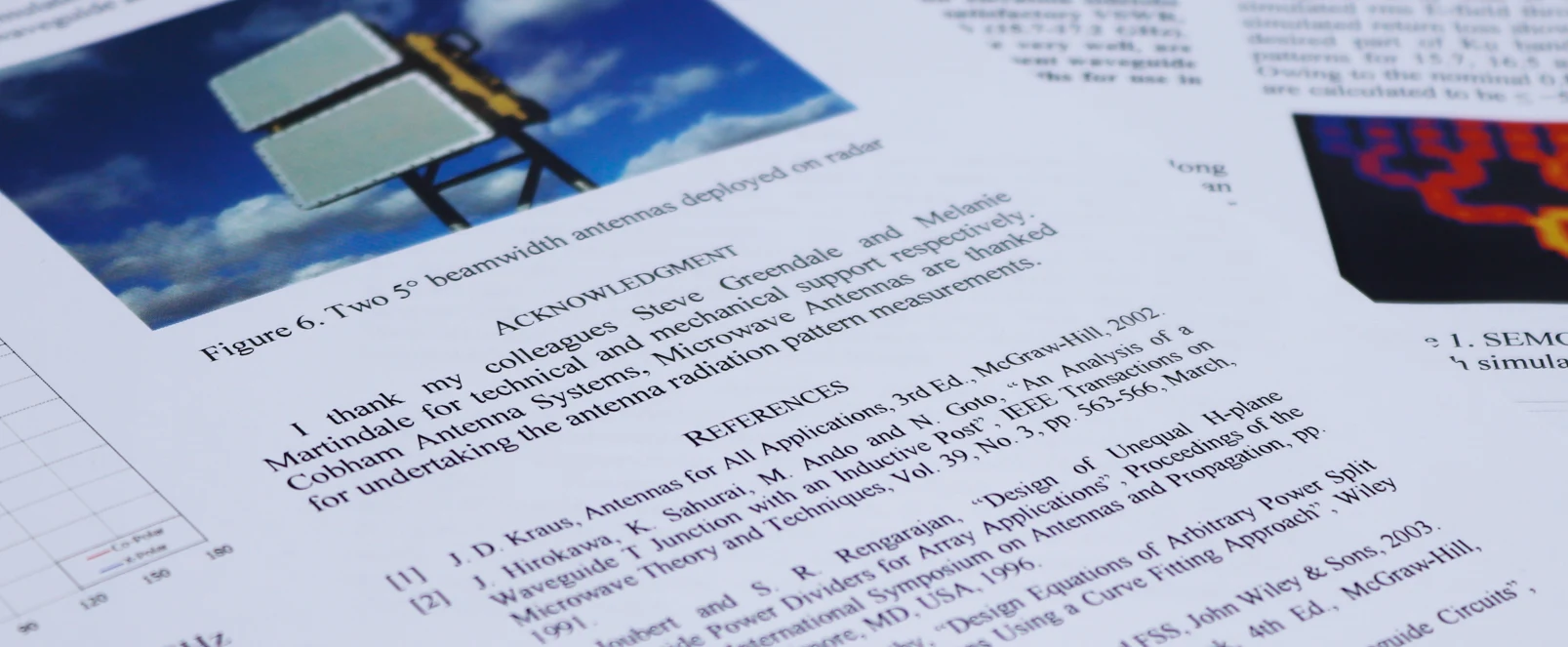

- Radar systems

- Real-time data capture

- Reliability

- Low SWAP

- Sensor systems

- Signal extraction

- Video analysis