The Challenge

Mobile Ad-hoc Networks (MANETs) present a highly dynamic and complex environment for data routing. Traditional routing protocols can struggle to adapt quickly and efficiently when nodes change rapidly, links fluctuate, or the network contains a large number of nodes. Examples include Ad-Hoc On-Demand Distance Vector (AODV), a reactive protocol suited to dynamic network environments, and Optimized Link State Routing (OLSR), a proactive protocol. Ensuring reliable, real-time packet delivery requires a routing solution that can understand and respond to continually shifting network topologies.

The Approach

Plextek developed a Deep Reinforcement Learning (DRL) algorithm based on Double Deep Q Networks (DDQN) to train a packet routing agent for a Mobile Ad-hoc Network (MANET), utilizing Graph Neural Networks (GNNs) to derive the agent’s policy.
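As a rough illustration of the Double DQN idea, the sketch below computes the training target for a single transition: the online network chooses the best next action and a separate target network supplies its value. The function name, the discount factor, and the example Q-values are all assumptions made for illustration and do not describe Plextek's implementation.

```python
def double_dqn_target(reward, next_q_online, next_q_target, done, gamma=0.99):
    """Return the Double DQN bootstrap target for one transition.

    next_q_online / next_q_target hold one Q-value per candidate action
    (for example, per candidate next hop) in the next state, as estimated
    by the online and target networks respectively.
    """
    if done or not next_q_online:
        # Terminal transition: nothing left to bootstrap from.
        return reward
    # Action selection uses the online network...
    best_action = max(range(len(next_q_online)), key=next_q_online.__getitem__)
    # ...while action evaluation uses the target network.
    return reward + gamma * next_q_target[best_action]


# Hypothetical values: the online network prefers action 1,
# so the target network's estimate for action 1 (0.6) is used.
target = double_dqn_target(
    reward=-1.0,
    next_q_online=[0.2, 0.9, 0.5],
    next_q_target=[0.4, 0.6, 0.8],
    done=False,
    gamma=0.9,
)
```

Separating selection from evaluation in this way is what distinguishes Double DQN from standard DQN, which takes the maximum of the target network's own estimates and tends to overestimate action values.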

Reinforcement Learning (RL) is a machine learning paradigm in which an agent learns to make decisions by interacting with its environment and receiving reward signals. By balancing exploration and exploitation, the agent iteratively refines its strategy to maximize long-term rewards. This makes RL especially suitable for dynamic tasks like routing in MANETs, where rapid adaptation is essential.
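One common way to balance exploration and exploitation is an epsilon-greedy rule with a decaying exploration rate. The sketch below is a generic example of that rule, not a description of the strategy used in this project; the function names, the linear decay schedule, and the default values are assumptions.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, else the best-valued one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore
    return max(range(len(q_values)), key=q_values.__getitem__)  # exploit


def decayed_epsilon(step, start=1.0, end=0.05, decay_steps=10_000):
    """Linearly anneal epsilon from `start` to `end` over `decay_steps` steps."""
    fraction = min(step / decay_steps, 1.0)
    return start + fraction * (end - start)


# With epsilon = 0 the choice is purely greedy: index 1 has the highest value.
greedy_choice = epsilon_greedy([0.1, 0.7, 0.3], epsilon=0.0)
```

Early in training the agent mostly explores; as epsilon decays it increasingly relies on what it has learned.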

By harnessing GNNs, the agent could effectively capture, interpret and predict the complex, dynamic structure of the network, learning to optimize packet routing decisions in real-time. The agent achieved near state-of-the-art performance in terms of packet delivery capability, rivalling established routing algorithms like AODV and OLSR. This outcome underscores the potential of combining DRL and GNNs for efficient, adaptive routing in MANETs, where traditional methods may struggle to adapt to rapidly changing network topologies.

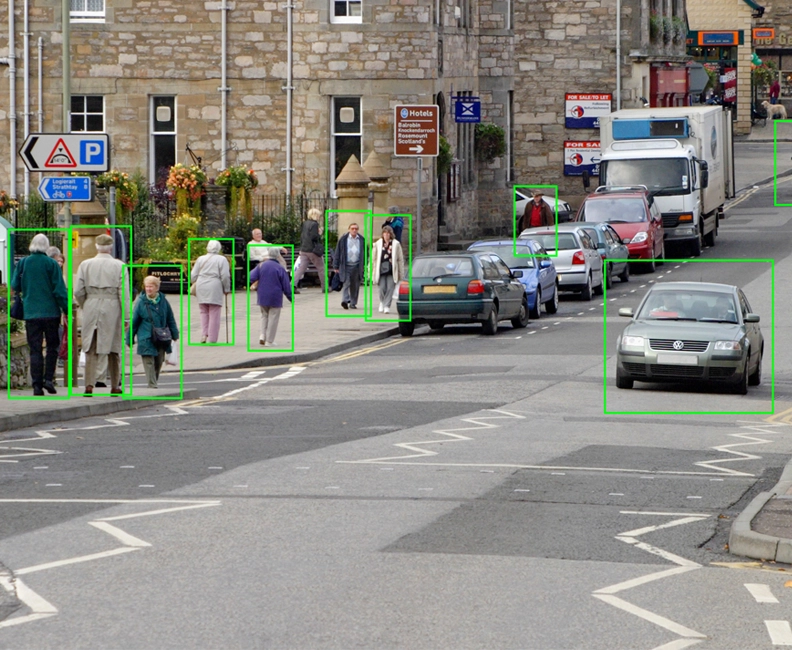

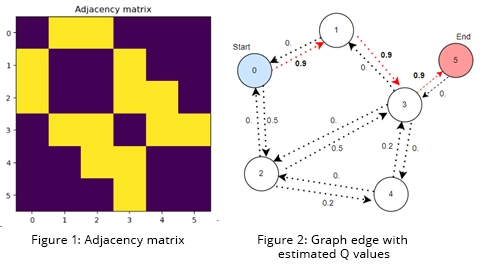

In the described setup, the MANET environment is modelled as a directed graph, where each network node is a vertex and each communication link between nodes is an edge. This graph-based representation allows network dynamics and interactions to be modelled in a structured, analysable form.

The adjacency matrix (Figure 1) is a critical tool in this graph-based representation, serving as the backbone for understanding the network’s topology. It not only records which nodes are directly connected but also determines which nodes exchange information during message passing.
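To make the role of the adjacency matrix concrete, the sketch below builds one for a small directed graph and runs a single, deliberately simple round of message passing in which each node adds the features of the nodes that link into it. The four-node topology, the single feature per node, and the plain-sum aggregation are assumptions chosen for illustration; a trained GNN would use learned transformations instead.

```python
def adjacency_matrix(num_nodes, links):
    """Build a directed adjacency matrix: adj[src][dst] == 1 if src can send to dst."""
    adj = [[0] * num_nodes for _ in range(num_nodes)]
    for src, dst in links:
        adj[src][dst] = 1
    return adj


def message_pass(adj, features):
    """One round of message passing: own features plus the sum of in-neighbour features."""
    num_nodes = len(adj)
    dim = len(features[0])
    updated = []
    for dst in range(num_nodes):
        aggregated = list(features[dst])  # start from the node's own features
        for src in range(num_nodes):
            if adj[src][dst]:  # src has a link into dst
                for k in range(dim):
                    aggregated[k] += features[src][k]
        updated.append(aggregated)
    return updated


# Hypothetical 4-node network: 0 <-> 1, 1 -> 2, 2 -> 3.
links = [(0, 1), (1, 0), (1, 2), (2, 3)]
adj = adjacency_matrix(4, links)
features = [[1.0], [2.0], [3.0], [4.0]]  # e.g. one made-up feature per node
updated = message_pass(adj, features)
```

Because the graph is directed, the matrix is not symmetric: node 2 receives information from node 1, but node 1 receives nothing from node 2.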

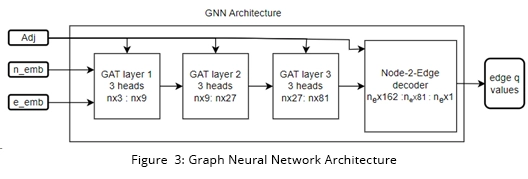

In the GNN architecture, the number of layers is matched to the maximum allowable number of hops, so that each node’s representation can draw on information from every node within that many hops. This improves spatial information capture, which in turn supports better network performance, lower latency, and more reliable packet routing. Additional ML techniques, such as shaping the reward function, distributed routing, and network prediction, can further enhance the network’s adaptability and efficiency.
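The link between layer count and hop count can be seen with a small sketch: each round of message passing extends the set of nodes that can influence a given node by one hop. The helper name and the five-node chain topology below are hypothetical and serve only to illustrate the principle.

```python
def receptive_field(neighbours, node, num_layers):
    """Return the nodes whose features can reach `node` after `num_layers`
    rounds of message passing.

    `neighbours[n]` lists the nodes that send messages to n (for a
    bidirectional link, each endpoint appears in the other's list).
    """
    seen = {node}
    frontier = {node}
    for _ in range(num_layers):
        # Each layer pulls in information from one hop further away.
        frontier = {nb for n in frontier for nb in neighbours[n]} - seen
        seen |= frontier
    return seen


# Hypothetical chain of five nodes with bidirectional links: 0 - 1 - 2 - 3 - 4.
chain = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}

one_layer = receptive_field(chain, 0, 1)    # node 0 sees itself and node 1
two_layers = receptive_field(chain, 0, 2)   # reaches as far as node 2
four_layers = receptive_field(chain, 0, 4)  # covers the whole chain
```

With fewer layers than the maximum hop count, a node at one end of a route would have no information about the far end when making a routing decision.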

The GNN’s Graph Attention (GAT) layers and Node-to-Edge decoder layer contribute to enhanced network adaptability, fault tolerance, and optimized routing decisions.
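The sketch below gives a simplified picture of these two components. The attention function follows the general GAT recipe (a LeakyReLU score on concatenated node features, normalized with a softmax over neighbours) but omits the learned feature projection, and the decoder simply scores each link from the embeddings of its two endpoints. The article does not specify the decoder's internals, so that part, along with all weights and feature values, is an assumption.

```python
import math


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def attention_weights(h_i, neighbour_feats, a):
    """GAT-style coefficients: softmax over LeakyReLU(a . [h_i || h_j])."""
    scores = [
        leaky_relu(sum(w * x for w, x in zip(a, h_i + h_j)))  # h_i + h_j concatenates
        for h_j in neighbour_feats
    ]
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def edge_scores(embeddings, edges, w):
    """Hypothetical node-to-edge decoder: one score per link from its endpoints."""
    return {
        (src, dst): sum(wk * x for wk, x in zip(w, embeddings[src] + embeddings[dst]))
        for src, dst in edges
    }


# Made-up features and weights for a node with two neighbours.
weights = attention_weights([1.0, 0.0], [[0.0, 1.0], [1.0, 1.0]], a=[0.5, 0.5, 0.5, 0.5])

embeddings = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}
scores = edge_scores(embeddings, [(0, 1), (0, 2)], w=[0.0, 0.0, 1.0, 2.0])
best_link = max(scores, key=scores.get)  # the outgoing link with the highest score
```

Scoring edges rather than nodes fits the routing task, since the agent's decision at each step is which outgoing link to forward a packet on.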

These advancements in ML-driven network architecture directly translate to improved operational effectiveness, user experience, and infrastructure robustness, securing a competitive advantage for our network.


The Outcome

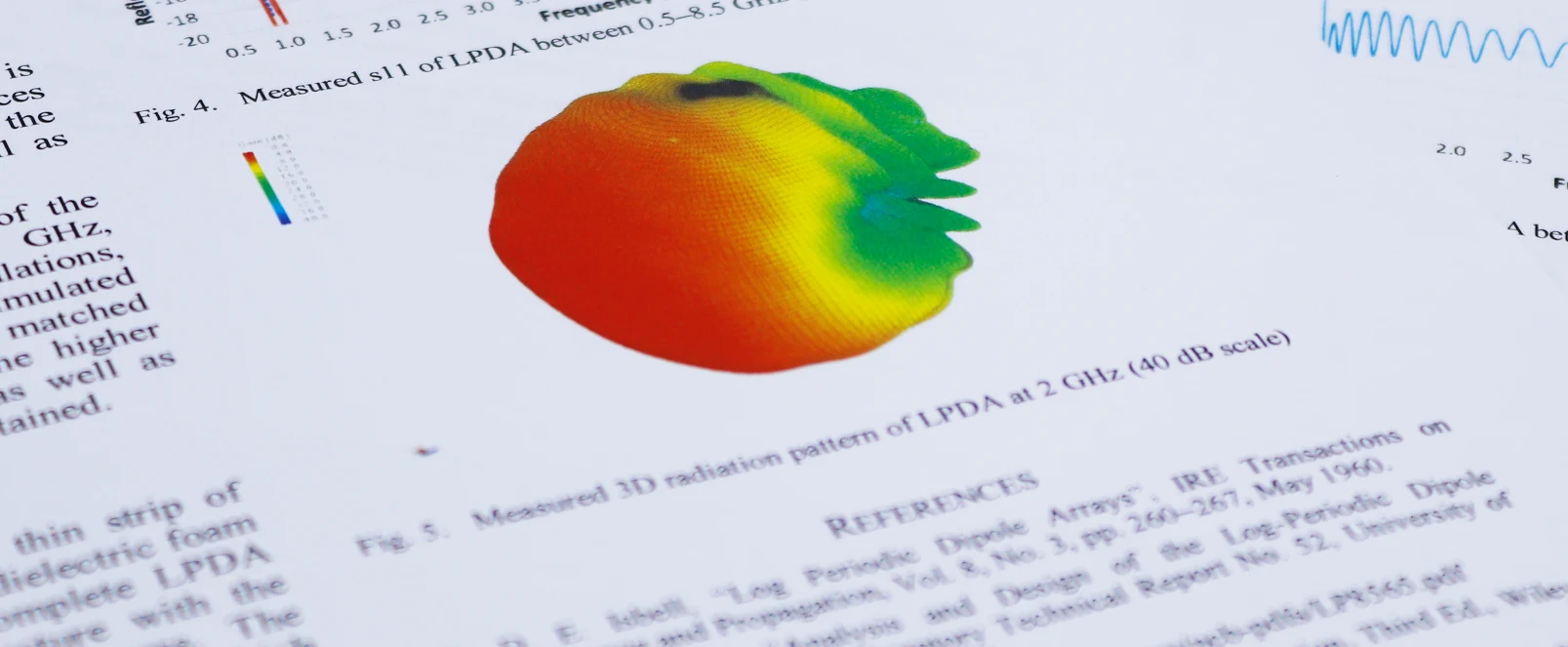

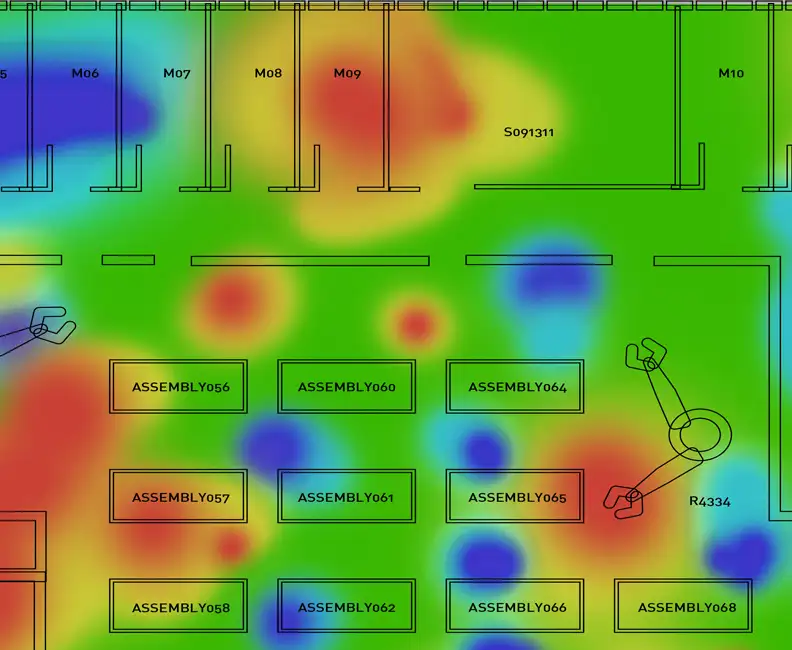

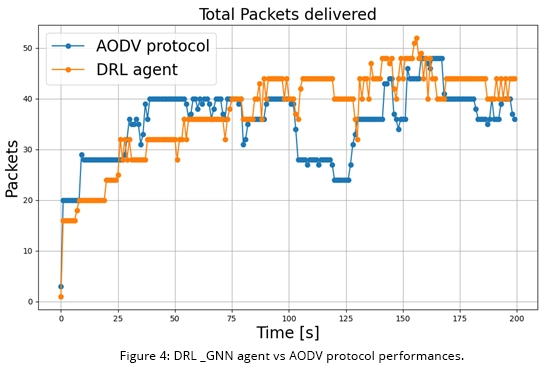

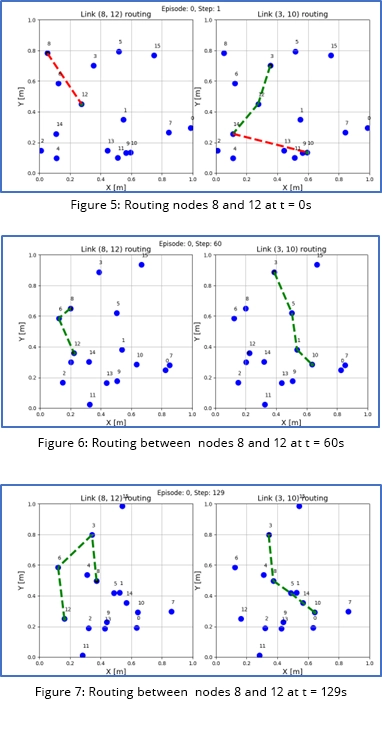

The GNN-based DDQN agent achieves performance comparable to a state-of-the-art mesh routing algorithm on network configurations similar to those it was trained on, as highlighted in Figure 4. When tested on randomly generated configurations, the agent demonstrates a robust level of generalization, largely due to the graph neural network’s ability to capture fundamental relationships between nodes. Figures 5, 6, and 7 provide snapshots of how the agent adapts its routing decisions in response to changing environmental conditions, consistently maintaining a reliable level of service. Additionally, machine learning approaches, particularly reinforcement learning, provide the flexibility to fine-tune the agent’s behaviour to suit diverse scenarios by modifying the reward schema during training.
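As a sketch of what modifying the reward schema could look like in practice, the example below expresses the reward as a small set of tunable weights and derives two variants that emphasize different goals. The field names and numeric values are hypothetical; the article does not publish the reward terms actually used.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RewardSchema:
    """Hypothetical reward weights for a packet-routing agent."""
    delivered: float = 10.0  # bonus when a packet reaches its destination
    per_hop: float = -1.0    # cost applied on every forwarding step
    dropped: float = -10.0   # penalty when a packet is lost


def step_reward(schema, delivered, dropped):
    """Reward for a single forwarding step under the given schema."""
    reward = schema.per_hop
    if delivered:
        reward += schema.delivered
    if dropped:
        reward += schema.dropped
    return reward


# Two illustrative variants: one discourages long routes, one discourages losses.
low_latency = RewardSchema(per_hop=-2.0)
high_reliability = RewardSchema(dropped=-50.0)
```

Training the same agent under `low_latency` or `high_reliability` would push it towards shorter routes or fewer dropped packets respectively, without any change to the network architecture.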

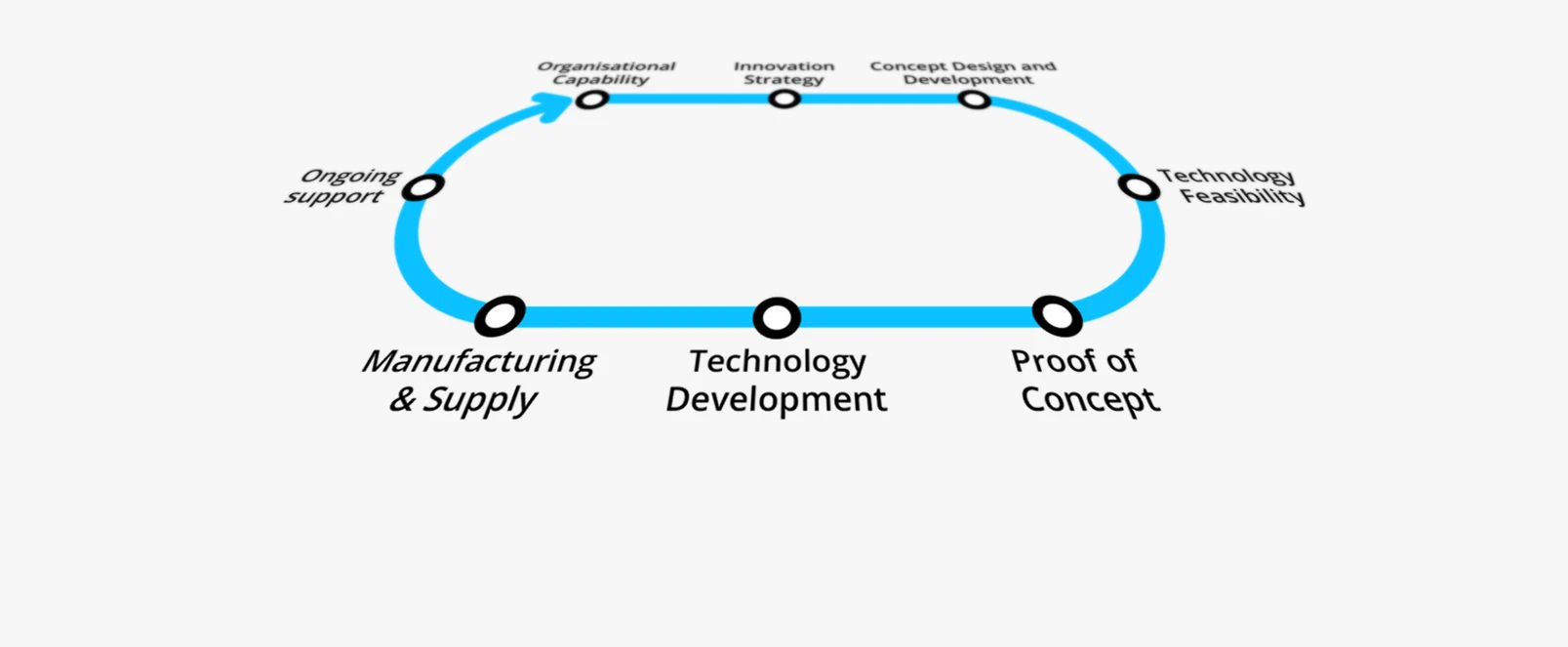

Ready to enhance network efficiency, scalability, and adaptability?

This GNN-driven DDQN agent showcases not only a high level of performance but also a strong capability for generalization across different network configurations. The adaptability of the agent is vital for navigating real-world complexities, offering scalability and reliability in dynamic networking environments. Utilizing ML, including RL, empowers us to tailor the agent’s behaviour to specific requirements, ensuring optimal network performance and adaptability. If you’re looking to enhance network efficiency, scalability, and adaptability, get in touch with Plextek’s team to explore opportunities for integrating similar cutting-edge solutions into your network infrastructure.